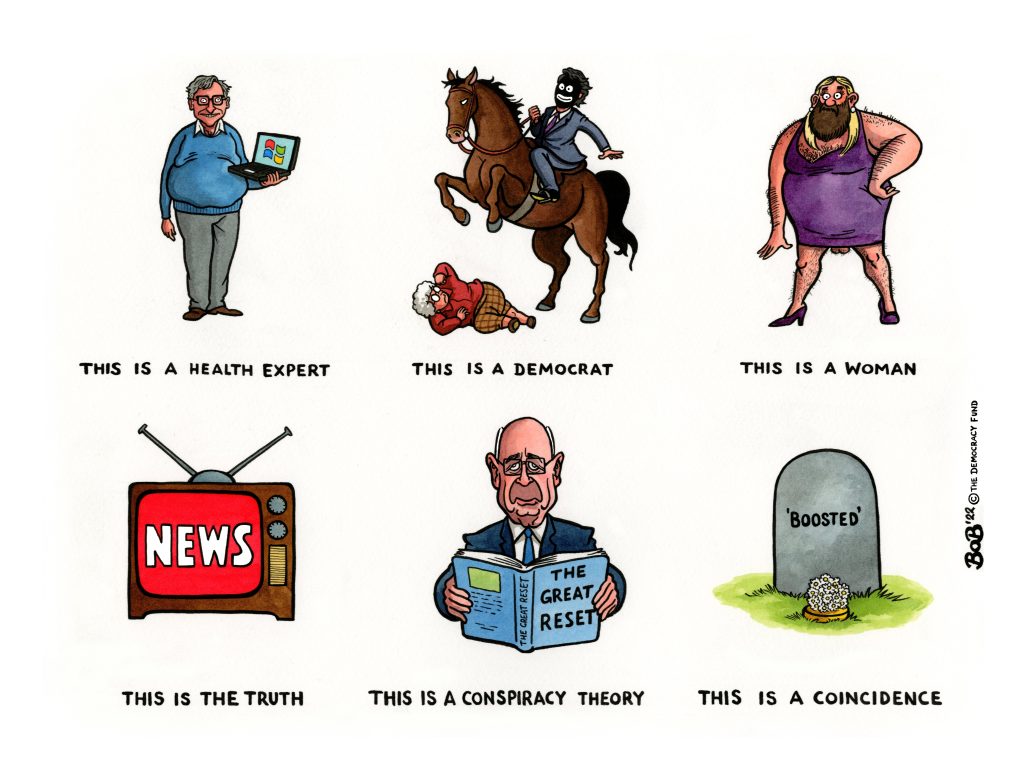

As the global censors circle, it’s worth remembering that life is for living. Screw their fake ‘safety’.

Credit: Bob Moran

Kiwi journalists were advised in late March about the arrival of US news-ratings agency/fact-checker NewsGuard to Australia and New Zealand, via media and PR database company, Telum.

The disinformation industry, also described as the Censorship Industrial Complex or Big Disinformation, has been slowly gearing up over the last three years, but it’s really getting its boots on now.

NewsGuard makes money from licensing its website ratings to advertisers, in principle so they can select ‘safe’ sites on which to advertise – until NewsGuard came along, no-one had any idea how to judge these things, apparently. The NewsGuard browser extension is also built into Microsoft’s Edge browser.

The rankings agency’s third largest investor is Publicis Groupe, one of the world’s largest and most influential public relations firms. Yes, a global PR firm funding news rankings … hmmm.

Writing in Mint Press News in 2019, investigative journalist Whitney Webb reported that NewsGuard was lobbying for its news site rankings to be installed by default on computers in US public libraries, schools, and universities as well as on all smartphones and computers sold in the United States.

Four years later this agenda is well advanced. After Telum announced the arrival of NewsGuard to our shores, it did a Q&A with a couple of NewsGuard executives. Like The Disinformation Project, they are targeting all kinds of sectors in the business of information, not just journalists.

Here’s what’s on offer:

- Reliability Ratings help advertisers redirect ad spend away from purveyors of misinformation that do not align with their brand safety standards and towards sources of quality journalism.

- Data, including its ‘Misinformation Fingerprints’ dataset of machine-readable false claims circulating the internet, equips technology platforms and content moderation teams with intelligence to protect users from online harm and control the spread of misinformation.

- Reliability Ratings assist reputation management professionals and media monitoring platforms in protecting their clients from misinformation.

- Reliability Ratings guide aggregation services as they work to curate news and information from publishers of the highest calibre that adhere to their standards of credibility and transparency.

- Data equips defense and military personnel with tools to identify and avoid disinformation.

- Data power research studies on misinformation, electoral integrity, media literacy, and other aspects of online news consumption habits.

- NewsGuard’s browser extension and associated media literacy materials provide schools and libraries with resources that help guide learners of all ages through the overwhelming landscape of online news and information.

- Public libraries can access NewsGuard’s ‘pro-bono media literacy programme’ – thanks to support from Microsoft – by downloading the NewsGuard browser extension to library computers.

With all its bases covered, the organisation is clearly serious about gaining a monopoly on truth, and isn’t meeting resistance in legacy media. After all, NewsGuard employs ‘real’ journalists to analyse news sites’ credibility.

Prophetically, Webb noted in her 2019 piece: “… as NewsGuard’s project advances, it will soon become almost impossible to avoid this neocon-approved news site’s ranking systems on any technological device sold in the United States. Worse still, if its efforts to quash dissenting voices in the US are successful, NewsGuard promises that its next move will be to take its system global.”

And here we are.

We’re also told in the Telum Q&A that, “NewsGuard has already made significant inroads into the generative AI landscape, demonstrating the potential for more transparent, balanced results that enable users to understand the news sources consulted in a given AI result.” This means NewsGuard datasets are probably being used to train AI such as ChatGPT.

As you’ve probably picked up by now, NewsGuard is a private sector censorship outlet that aims to retrain all of us to think that there is just one valid perspective – the one it tells us. It is of course a complete subversion of democratic sensibilities and the social contract.

But Big Disinformation is coming at us in other ways too. In fact everywhere you look at the moment, those concerned with the information sphere are screeching about the threat of disinformation, and how to get sceptical publics to trust ‘authoritative’ voices in science and journalism again. What’s notable is that they never examine their own behaviour as a possible causative factor in declining trust.

Telum recently advised journalists that the Bertha Foundation, a social justice documentary funding organisation, is focussing its yearly Bertha Challenge on the topic of ‘disinformation and climate change’.

It will ask applicants to investigate how disinformation is manipulating and distorting the impact of the human-caused climate crisis, and how disinformation campaigns help to protect corporate interests, the political status quo and allow for further industrial corruption.

The second question is quite salient. I too would like young documentary makers to scrutinise the disinformation coming from the private and public sectors, and their collusion, on a range of issues – such as ‘Big Disinformation’, the mass poisoning of our planet and people from industrial waste, agricultural chemicals and toxic medicines, or the current move away from representative government to global governance. These would be worthy investigations.

But the lens entrants are being given is fully loaded against dissenting voices and alternative narratives. Aspiring young documentary makers are stymied, right from the get go, from doing great investigative work. They are told what is taboo, what is beyond challenge.

Meanwhile, the Rory Peck awards for freelance journalists, whose work is in principle free from institutional gatekeeping, are funded in part by the Google News Initiative. Sewn up.

And lastly, the International Science Council is “preparing for Crisis X”, in a discussion paper due to be released later in July. It asks, “Can newsrooms and the scientific community overcome sceptical publics?”

According to a survey the ISC ran with media outlets, journalism and science risk being held hostage to algorithms due to the “platformisation” of news and are vulnerable to social media content moderation systems that reward “extremism, conspiracy theories, and disinformation”.

The expert quoted, Courtney Radsch, director of the Centre for Journalism and Liberty at the Open Markets Institute, said, “We must cultivate systems, institutions, and norms that enable quality and useful information to flourish and address the interplay between the technological infrastructure in which information and media systems are embedded”.

Is that just a word salad for algorithmically dictated censorship according to elite preferences?

In the United Kingdom, the Online Safety Bill is back in parliament this week – and while there are many problems with it, an urgent issue is getting it amended to save end-to-end encryption. As currently drafted it will give media regulator Ofcom powers to make WhatsApp and Signal install government approved software on personal phones to scan private messages – you guessed it, for the public’s ‘safety’.

In New Zealand, the Department of Internal Affairs has proposed its own version of this bill (as have all major western democracies currently) – a new online regulator to police what you and I can say on social media platforms, blogs, podcasts and even email lists with 25,000 subscribers or more – all without Parliamentary scrutiny. Read the DIA’s discussion document here. Public consultation is open until 31 July.

But rather than police information and ‘cultivate systems that enable quality information’ – doublespeak for the global censorship apparatus – a better idea might simply be to clean up the corruption and capture of politicians, medicine, science, journals and universities.

We could encourage journalists to once more go out into the field to generate news stories and speak to people of all ilks and opinions, present various views and arguments, and simply leave the public to decide for itself what is true and what is not through robust public discourse, instead of heavy-handed authoritarian governments doing it for us.

Screenshot: The character Hector P. Valenti, from the children’s film ‘Lyle, Lyle, Crocodile’, takes a more lighthearted approach to life.

This infantilising attitude, by which we are treated as incapable of sniffing out truth through reason – a combination of intuition and logical inference – and the frankly duplicitous inclination towards ‘protecting’ us from the ugly unsafe world … well. Safety is the perennial excuse of tyrants for their tyranny. Remember covid?

The public should reject all of this wholeheartedly. As it moves into our schools and local libraries, when our employers offer us workplace training in how to spot misinformation, or how to deal with ‘extremists in the workplace’, when the legacy media claims it’s fact-checking a dissenting voice, when ChatGPT confirms institutional bias – in fact we should be on guard whenever anyone uses the pernicious terms ‘misinformation’ and ‘disinformation’ and treats them as a serious threat to be dealt with (and that’s not because they don’t exist – the real purveyors of disinformation are the very ones accusing everyone else of it).

A recent children’s movie contained some truly excellent sage advice we might follow instead.

In ‘Lyle, Lyle, Crocodile’, the oily but lovable stage performer Hector P. Valenti says to an overly serious child: “‘Safe’ is a repugnant little word, and should be expunged from your vocabulary immediately. Life is for living … and living is risky business”.